Resume Automation 2/2

Now that our RAG system is set up, we'll use it to generate personalized CVs and motivation letters.

We'll use LangChain to:

- Load relevant RAG CVs and motivation letters as context

- Input job descriptions from LinkedIn into our prompt

In this project, we won't generate these documents entirely from scratch. Experience has shown that such an approach often yields mixed results. Instead, we'll use templates with placeholders that we want to be generated.
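To make the template idea concrete, here is a minimal sketch using only Python's standard library. The placeholder names `branding` and `skills` match the fields generated later in this post. The template text, the sample values, and the `fill_template` helper are hypothetical, and the actual project fills a .docx template rather than a plain string.

```python
from string import Template

# Hypothetical plain-text stand-in for the .docx template used later.
CV_TEMPLATE = Template("PROFILE\n$branding\n\nKEY SKILLS\n$skills\n")


def fill_template(template: Template, values: dict) -> str:
    """Substitute generated values into the fixed template text."""
    # substitute() raises KeyError when a placeholder has no value,
    # which surfaces incomplete LLM output early.
    return template.substitute(values)


# Made-up values standing in for what the LLM would generate.
generated = {
    "branding": "Data scientist focused on geospatial analysis.",
    "skills": "Programming Languages: Python | SQL",
}
filled = fill_template(CV_TEMPLATE, generated)
print(filled)
```

Everything outside the placeholders stays under your control, which is why this tends to be more predictable than asking the model for a full document.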

import pandas as pd

import numpy as np

import json

from datetime import datetime, timedelta

Set LLMs and load job listing

Load job listing

Note:

For scraping job listings, tools such as Scrapy, BeautifulSoup, Chromium, and Selenium are the usual choices.

However, for popular sites, chances are someone has already built an API wrapper that works far better than anything I could write myself.

More generally, if a scraper exists on the Apify Store, you can often find a free alternative on GitHub.

To generate a CSV file with job data from LinkedIn, I used the LinkedIn API for Python, which has a solid track record in terms of GitHub stars and users.

!pip install linkedin-api

job_listing = pd.read_csv("listing.csv")

Import vector store and documents

In the following example, I load an existing Chroma vectorstore created using kotaemon.

To see how this is done, please refer to Resume-Automation-1-2-RAG

from langchain_chroma import Chroma

from langchain_openai import OpenAIEmbeddings

from langchain.globals import set_verbose, set_debug

# Verbose and debug modes are used to inspect and/or debug chains and the resulting prompts

set_verbose(False)

set_debug(False)

Embeddings are numerical representations of real-world objects that machine learning (ML) and artificial intelligence (AI) systems use to understand complex knowledge domains the way humans do. We used these embeddings to create our vector store, so we must pass the same embedding model when instantiating it here.

To access OpenAI embedding models, you'll need to create an OpenAI account, get an API key, and install the langchain-openai integration package.
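To illustrate why the query must be embedded with the same model as the stored documents, here is a toy sketch of similarity-based retrieval. The three-dimensional vectors and document names are made up, and cosine similarity is only one common measure; the vector store may be configured with a different distance metric.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional "embeddings"; real models return far more dimensions.
documents = {
    "cv_geospatial": [0.9, 0.1, 0.0],
    "cv_frontend": [0.1, 0.9, 0.2],
}
query = [0.8, 0.2, 0.1]  # hypothetical embedding of a job description

# Rank stored documents by similarity to the query, best match first.
ranked = sorted(
    documents,
    key=lambda name: cosine_similarity(documents[name], query),
    reverse=True,
)
print(ranked)
```

Vectors produced by two different embedding models live in unrelated spaces, so comparing them this way would give meaningless rankings.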

# API_KEY must hold your OpenAI API key (defined beforehand, e.g. read from an environment variable)
embeddings = OpenAIEmbeddings(
    model="text-embedding-3-large",
    api_key=API_KEY
)

vectorstore = Chroma(collection_name="index_1", persist_directory="./user_data/vectorstore/", embedding_function=embeddings)

Initiate LLM

from langchain_openai import ChatOpenAI

I will split the process across two separate LLM instances, one per task:

- CV generation

- Motivation letter creation

I'll initialize both LLMs using the OpenAI API with the "gpt-3.5-turbo" model, which is significantly more cost-effective to run.

from langchain_core.pydantic_v1 import BaseModel, Field

from typing import Optional

retriever = vectorstore.as_retriever()

For the CV LLM, I experimented with BaseModel and bind_tools. This approach allows me to structure the output following a specific class using pydantic. You can find more information about this method in the output_parsers documentation.

However, I should note that this was purely for experimentation. A simpler JsonOutputParser without pydantic would have worked just as well for our purposes.
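As a rough idea of what the simpler, pydantic-free route involves, the sketch below parses a JSON reply into a small dataclass using only the standard library. `CvFields`, `parse_cv_fields`, and the sample reply are hypothetical illustrations; this is not LangChain's JsonOutputParser.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class CvFields:
    """Plain-Python stand-in for the two CV placeholders."""
    branding: Optional[str] = None
    skills: Optional[str] = None


def parse_cv_fields(raw: str) -> CvFields:
    """Parse a JSON string and keep only the expected keys."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    # Missing keys fall back to None, mirroring the Optional fields.
    return CvFields(branding=data.get("branding"), skills=data.get("skills"))


# Hand-written reply, standing in for what a model might return.
raw_reply = '{"branding": "Senior researcher.", "skills": "**Languages**: Python | SQL"}'
fields = parse_cv_fields(raw_reply)
print(fields.branding)
```

The trade-off is that without a schema bound to the model, the prompt itself has to spell out the expected keys.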

class CvInputs(BaseModel):
    """Placeholders for the CV template: branding (personal branding statement) and skills (essential skills relevant for the position)"""
    branding: Optional[str] = Field(
        default=None,
        description="[2-3 sentence statement]"
    )
    skills: Optional[str] = Field(
        default=None,
        description="""**[Category Name]**: [Skill 1] | [Skill 2] | [Skill 3] \n [Continue for all categories]"""
    )

llm_model_cv = ChatOpenAI(
    model="gpt-3.5-turbo",
    temperature=0,
    timeout=20,
    api_key=API_KEY).bind_tools([CvInputs])

llm_model_ml = ChatOpenAI(
    model="gpt-3.5-turbo",
    temperature=0,
    timeout=20,
    api_key=API_KEY)

Generate documents

from langchain.chains import create_retrieval_chain

from langchain.chains.combine_documents import create_stuff_documents_chain

from langchain.prompts import ChatPromptTemplate, HumanMessagePromptTemplate, SystemMessagePromptTemplate

CVs

In order to generate a CV, we need two distinct parts:

- branding: a personal branding statement

- skills: essential skills picked from my CV and relevant for the role

To do so, we are going to use ChatPromptTemplate. In its most basic configuration, ChatPromptTemplate takes a system prompt (the instructions for our LLM) and a human prompt (our input question).
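Conceptually, a chat prompt template boils down to filling variables into two role-tagged messages. The plain-Python sketch below shows that idea. `build_messages` is a hypothetical helper, the templates are shortened stand-ins, and the `system`/`user` role names follow the common chat-API convention rather than LangChain's own message classes.

```python
def build_messages(system_template: str, human_template: str, **variables) -> list[dict]:
    """Fill both templates and return role-tagged chat messages."""
    return [
        {"role": "system", "content": system_template.format(**variables)},
        {"role": "user", "content": human_template.format(**variables)},
    ]


# Shortened, hypothetical templates for illustration only.
system_template = "You are an ATS specialist. Use ONLY the CONTEXT."
human_template = "CONTEXT:\n{context}\n\nJOB DESCRIPTION:\n{input}"

messages = build_messages(
    system_template,
    human_template,
    context="Python, SQL, geospatial analysis",
    input="Data engineer position",
)
for message in messages:
    print(message["role"], "->", message["content"][:40])
```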

Note that Claude.ai is a powerful ally when it comes to writing a good prompt.

Another approach would be to generate several prompts and use prompt versioning in LangSmith to compare the accuracy of their results.

The prompt

from langchain.output_parsers.openai_tools import JsonOutputToolsParser

SYSTEM_TEMPLATE = """You are an advanced ATS (Applicant Tracking System) specialist with extensive experience in technical recruitment for software engineering, data science, and big data roles. Your task is to perform two analyses:
1. Create structured skill categories
2. Generate a personal branding statement

ANALYSIS PART 1 - SKILL CATEGORIZATION
Rules:
1. Skill Extraction:
   - Extract ONLY explicitly mentioned skills from CONTEXT and not JOB DESCRIPTION
   - Match skills to job requirements
   - Categorize by technical domain
2. Categorization Requirements:
   - Create 5-6 distinct categories
   - Include 2-3 relevant skills per category
   - Focus on concrete tools/technologies
   - Avoid inferring skills not explicitly stated
3. Format Rules:
   - Bold category names
   - Separate skills with vertical bars (|)
   - Keep everything single-line per category

Categories to Consider:
- Programming Languages
- Frameworks & Libraries
- Databases & Storage
- Cloud Platforms
- Development Tools
- Data Processing
- Business Tools
- Methodologies
- Domain Knowledge
- Certifications

ANALYSIS PART 2 - PERSONAL BRANDING
Rules:
1. Statement Construction (2-3 sentences):
   - Lead with strongest alignment between experience and job requirements
   - Incorporate specific, quantifiable achievements from the context
   - Use industry-standard terminology
   - Maintain formal professional tone
2. Quality Control:
   - Verify each claim against the provided context
   - Flag any potential misalignments or gaps
   - Ensure all stated qualifications are explicitly supported by context

STRICT RULES FOR BOTH ANALYSES:
- Use ONLY information explicitly stated in the CONTEXT
- NO inference or assumptions
- NO information from JOB DESCRIPTION should be included in skills list
- NO generic/soft skills unless explicitly stated
- NO extended descriptions
- If critical job requirements lack matching experience in CONTEXT, acknowledge the gap"""

HUMAN_TEMPLATE = """CONTEXT:
{context}

JOB DESCRIPTION:
{input}

Please provide both the skill categorization and personal branding statement based on the provided guidelines."""

system_message_prompt = SystemMessagePromptTemplate.from_template(
    SYSTEM_TEMPLATE
)

human_message_prompt = HumanMessagePromptTemplate.from_template(HUMAN_TEMPLATE)

chat_prompt = ChatPromptTemplate.from_messages([
    system_message_prompt,
    human_message_prompt]
)

A few words on chains. Chains are sequences of calls, whether to an LLM, a tool, or a data preprocessing step. These chains natively support streaming, async, and batch processing out of the box. They also automatically provide observability at each step.

create_stuff_documents_chain: This chain takes a list of documents, formats them all into a prompt, then passes that prompt to an LLM. It passes ALL documents, so ensure they fit within the context window of the LLM you're using.

create_retrieval_chain: This chain takes in a user inquiry, passes it to the retriever to fetch relevant documents, then passes those documents (along with the original inputs) to an LLM to generate a response.
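The two descriptions above can be sketched as a toy pipeline in plain Python: retrieve, stuff every document into one prompt, call the model, and return the input, the retrieved context, and the answer. All function names, the two-document corpus, and the fake model are hypothetical; this only mirrors the data flow and is not how LangChain implements these chains.

```python
from typing import Callable


def toy_retriever(query: str) -> list[str]:
    """Stand-in retriever: return documents sharing a word with the query."""
    corpus = [
        "Built Python data pipelines for geospatial analysis.",
        "Designed dashboards in JavaScript.",
    ]
    words = set(query.lower().split())
    return [doc for doc in corpus if words & set(doc.lower().rstrip(".").split())]


def stuff_documents(docs: list[str], question: str) -> str:
    """'Stuff' every retrieved document into a single prompt string."""
    context = "\n\n".join(docs)
    return f"CONTEXT:\n{context}\n\nJOB DESCRIPTION:\n{question}"


def toy_retrieval_chain(question: str, llm: Callable[[str], str]) -> dict:
    """Retrieve, stuff, call the model, and return the three usual keys."""
    docs = toy_retriever(question)
    prompt = stuff_documents(docs, question)
    return {"input": question, "context": docs, "answer": llm(prompt)}


# A fake "LLM" that only reports the prompt length, so the sketch runs offline.
result = toy_retrieval_chain("python engineer", lambda p: f"{len(p)} chars")
print(result["context"])
```

Because every retrieved document ends up in the prompt, the number of documents the retriever returns directly drives prompt size.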

parser = JsonOutputToolsParser(return_id=True,first_tool_only=True)

chain = create_stuff_documents_chain(llm_model_cv, chat_prompt, output_parser=parser)

rag_chain = create_retrieval_chain(retriever, chain)

Run query

Batch query

batches_prompts = [{"input": job_description} for job_description in job_listing.text.tolist()]
results = rag_chain.batch(batches_prompts)
job_listing = job_listing.join(pd.DataFrame.from_records([x['answer']['args'] for x in results]))
job_listing[["branding","skills"]].head()

| | branding | skills |
|---|---|---|
| 0 | Experienced Senior Researcher and Data Scienti… | Programming Languages: Python \| JavaScript… |
| 1 | Experienced Senior Researcher and Data Scienti… | Programming Languages: Python \| JavaScript… |
| 2 | Experienced Data Scientist specializing in Geo… | Programming Languages: Python \| SQL \| Grap… |
| 3 | Experienced Senior Researcher & Data Scientist… | Programming Languages: Python \| SQL \| Java… |
| 4 | Experienced Senior Researcher & Data Scientist… | Programming Languages: Python \| SQL \| Grap… |

Write in file

Finally, we use docxtpl to populate our template. This library relies on jinja2 templating and provides RichText objects to format our strings.

from docxtpl import DocxTemplate, RichText
import os
from copy import deepcopy

def format_skills(skills_text: str) -> list:
    """
    Formats skills text into a list of dictionaries with category and skills.
    Each category will be bold in the template.
    """
    skills_list = []
    for line in skills_text.split('\n'):
        # Tolerate stray spaces around the line breaks
        line = line.strip()
        if line.startswith('**'):
            # Extract category and skills
            category = line.split('**')[1].split(':')[0]
            # Split on the first colon only, so colons inside the skills are kept
            skills = line.split(':', 1)[1].strip()
            # Create rich text for category (bold)
            rt = RichText()
            rt.add(f"{category}: ", bold=True)
            rt.add(skills)
            skills_list.append({
                'category_skills': rt
            })
    return skills_list

def create_cv_document(cv_data: dict, template_path: str, output_path: str = "cv_output.docx"):
    """Creates a CV document using a template and the provided data."""
    context = deepcopy(cv_data)
    # Check if template exists
    if not os.path.exists(template_path):
        raise FileNotFoundError(f"Template file not found: {template_path}")
    # Load the template
    doc = DocxTemplate(template_path)
    # Format the skills data
    context['skills'] = format_skills(context['skills'])
    # Render the template with our data
    doc.render(context=context, autoescape=True)
    # Save the generated document
    doc.save(output_path)
    return output_path

template_path = "CV_template.docx"  # Path to your template

for index, row in job_listing.iterrows():
    output_path = f"./CV_outputs/{row.slug}_cv.docx"
    try:
        created_file = create_cv_document(
            row[['branding','skills']].to_dict(),
            template_path=template_path,
            output_path=output_path
        )
        print(f"✅ CV has been successfully created at: {created_file}")
    except Exception as e:
        print(f"❌ Error creating document: {str(e)}")

✅ CV has been successfully created at: ./CV_outputs/data_architect_cv.docx

✅ CV has been successfully created at: ./CV_outputs/online_data_analyst__cv.docx

✅ CV has been successfully created at: ./CV_outputs/big_data_engineering_cv.docx

✅ CV has been successfully created at: ./CV_outputs/azure_data_engineer_cv.docx

✅ CV has been successfully created at: ./CV_outputs/mobile_phone_data_re_cv.docx

✅ CV has been successfully created at: ./CV_outputs/data_communication_a_cv.docx

✅ CV has been successfully created at: ./CV_outputs/junior_data_engineer_cv.docx

✅ CV has been successfully created at: ./CV_outputs/technical_solutionin_cv.docx

Motivation Letter

To generate a motivation letter, we need two distinct parts:

- Projects: Select specific projects from the RAG that are relevant to the current position.

- Blanks: Fill in details from the job offer, such as position, organization, and department.

Once again, we'll use ChatPromptTemplate.

Unlike our first prompt, where we combined both tasks, here we'll run two separate prompts, one for each task. Why? I want to feed the result of the first prompt into the second. We'll explore how to do this later.
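As a preview of that two-step idea, here is a plain-Python sketch in which the first step returns project statements and the second step embeds them into its own prompt. Both functions are hypothetical stand-ins for LLM calls, and the shortened letter text is illustrative only.

```python
def select_projects(job_description: str) -> dict:
    """Step 1 stand-in: would ask the LLM to pick relevant projects."""
    return {
        "project_1": "For instance, project A.",
        "project_2": "Together with organisation B, project C.",
    }


def fill_letter(job_description: str, project_1: str, project_2: str) -> str:
    """Step 2 stand-in: the letter prompt embeds the step 1 results."""
    letter_prompt = (
        "My work involved [specific tasks]. {project_1}\n"
        "{project_2}\n"
        "JOB DESCRIPTION: {input}"
    )
    return letter_prompt.format(
        project_1=project_1, project_2=project_2, input=job_description
    )


job = "Data engineer at a hypothetical company"
projects = select_projects(job)                    # first prompt
prompt_for_letter = fill_letter(job, **projects)   # second prompt reuses its output
print(prompt_for_letter)
```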

from langchain_core.output_parsers import JsonOutputParser
from langchain_core.messages import AIMessage, AIMessageChunk
from langchain_core.runnables import RunnablePassthrough

HUMAN_TEMPLATE = """CONTEXT:
{context}

JOB DESCRIPTION:
{input}

Please provide statements based on the provided guidelines."""

human_message_prompt = HumanMessagePromptTemplate.from_template(HUMAN_TEMPLATE)

In this example, we'll use the JsonOutputParser() without Pydantic. This approach requires the prompt to explicitly describe the desired output format.

Additionally, we'll chain our create_retrieval_chain with a custom function that reshapes the LLM output to fit our needs. Since I want to pass the output of this query to the next step, the default output of create_retrieval_chain (a dictionary with input, context, and answer keys) isn't ideal.
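The reshaping step can be pictured on a hand-written dictionary. In this sketch, `flatten_answer` is a hypothetical name and the sample values are made up; it copies the answer instead of modifying it in place, which is a design choice of the sketch rather than a requirement.

```python
def flatten_answer(chain_output: dict) -> dict:
    """Merge the original input into the parsed answer and drop the rest."""
    flat = dict(chain_output["answer"])  # copy, so the caller's dict is untouched
    flat["input"] = chain_output["input"]
    return flat


# Hand-written example of the retrieval chain's output shape.
chain_output = {
    "input": "Job description text",
    "context": ["retrieved document 1", "retrieved document 2"],
    "answer": {"project_1": "For instance, ...", "project_2": None},
}
flat = flatten_answer(chain_output)
print(sorted(flat))
```

The flattened dictionary exposes `input`, `project_1`, and `project_2` at the top level, which is the shape a follow-up prompt with those three variables expects.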

SYSTEM_TEMPLATE_projects = """You are an ATS (Applicant Tracking System) specialist with expertise in recruiting for software engineering, data science, and big data roles. Given the provided context and job description, perform the following:
1. Identify 2-3 projects that closely align with the required skills or responsibilities in the job description.

Guidelines:
- Use only information that is explicitly provided in the context or job description.
- Do not add any details not directly supported by the text.
- If there is insufficient information to complete any part, state "Insufficient information."

Required JSON output schema:
"project_1": "For instance, __project #1 description__". string or null
"project_2": "Together with __organisation__, __Project #2 description__". string or null"""

system_message_prompt_projects = SystemMessagePromptTemplate.from_template(

SYSTEM_TEMPLATE_projects

)

chat_prompt_projects = ChatPromptTemplate.from_messages([

system_message_prompt_projects,

human_message_prompt]

)

parser = JsonOutputParser()

projects_chain = create_stuff_documents_chain(llm_model_ml, chat_prompt_projects,output_parser=parser)

projects_chain = create_retrieval_chain(retriever, projects_chain)

def simple_output(chain_output: dict) -> dict:
    """Merge the original input into the parsed answer and return the answer."""
    chain_output['answer']['input'] = chain_output['input']
    return chain_output['answer']

projects_chain = projects_chain | simple_output

SYSTEM_TEMPLATE_blanks = """You are an advanced ATS (Applicant Tracking System) specialist with extensive experience in technical recruitment for software engineering, data science, and big data roles.
Perform tasks based on the provided context and job description:
1. Fill in the blanks in the following template:
I am pleased to learn that [Company] is looking for a [Position] to complete its team [within Department]. Based on the job description, I think this position is perfectly aligned with my core set of skills and interests.\n
I am currently working at Geodan, a geospatial intelligence R&D and consultancy firm. In our research team, we challenge ourselves using open source data & AI technologies to develop innovative geospatial data analysis. My work involved [specific tasks]. {project_1}\n
With strong ties to academia, I collaborated on numerous projects and lectures with esteemed institutions such as Amsterdam Municipality, Dutch ministries, TNO, Deltares, the Vrije Universiteit Amsterdam, TU Delft, Utrecht University, the EU Joint Research Centre and more. {project_2}\n
[Company] captures my interest with its project on [company related projects]. I am confident that my experience in [specific competencies, hard and soft skills] aligns well with the key technologies, such as [companies technologies], that you have implemented across your projects.\n
As a [Position], I am confident that I will help foster [key competencies, deliverables] for your [Department]. I am passionate about creative problem-solving and eager to work with colleagues on [key company objectives].\n
I am highly motivated and believe I can bring a real value to your teams, and as a letter cannot fully reflect my character and my motivation, I would appreciate the opportunity to have an interview with you. If you need more information, do not hesitate to contact me. I am looking forward to hearing from you.\n
Thank you for your time and consideration.\n
Yours Sincerely,\n
Corentin Kuster

Instructions:
- Fill [Company] with the company name from the job description
- Fill [Position] with the job title from the job description
- Fill [Department] with the department name if stated in the job description, otherwise leave blank
- Fill [company related projects] with specific projects mentioned in the job description
- Fill [specific competencies, hard and soft skills] from CONTEXT ONLY and not JOB DESCRIPTION
- Fill [companies technologies] with relevant skills and technologies from the job description
- Fill [key competencies, deliverables] and [key company objectives] with specific goals or responsibilities from the job description
2. Rephrase the overall results, keep the final version within the same size / words count approximately.

Rules for both tasks:
- Use only information explicitly stated in the context or job description
- Do not include any information not directly supported by the provided text
- If there is insufficient information to complete any part of the tasks, state "Insufficient information" for that part"""

system_message_prompt_blanks = SystemMessagePromptTemplate.from_template(

SYSTEM_TEMPLATE_blanks

)

chat_prompt_blanks = ChatPromptTemplate.from_messages([

system_message_prompt_blanks,

human_message_prompt]

)

blanks_chain = create_stuff_documents_chain(llm_model_ml, chat_prompt_blanks)

blanks_chain = create_retrieval_chain(retriever, blanks_chain)

My two query chains are set. We must now chain them so that the output of the first is passed into the prompt of the second.

We do it like so:

chain = projects_chain | RunnablePassthrough.assign(blanks=blanks_chain)

Run query

Batch

batches_prompts = [{"input": job_description} for job_description in job_listing.text.tolist()]
results = chain.batch(batches_prompts)
job_listing = job_listing.join(pd.DataFrame([x['blanks']['answer'] for x in results], columns=['motiv']))
job_listing[["branding","skills","motiv"]].head()

| | branding | skills | motiv |
|---|---|---|---|
| 0 | Experienced Senior Researcher and Data Scienti… | Programming Languages: Python \| JavaScript… | I am pleased to learn that Boskalis is looking… |
| 1 | Experienced Senior Researcher and Data Scienti… | Programming Languages: Python \| JavaScript… | I am pleased to learn that Telus International… |
| 2 | Experienced Data Scientist specializing in Geo… | Programming Languages: Python \| SQL \| Grap… | I am pleased to learn that NTU International A… |
| 3 | Experienced Senior Researcher & Data Scientist… | Programming Languages: Python \| SQL \| Java… | I am pleased to learn that ABN AMRO is looking… |
| 4 | Experienced Senior Researcher & Data Scientist… | Programming Languages: Python \| SQL \| Grap… | I am pleased to learn that NTU International A… |

Write in file

from docxtpl import DocxTemplate, RichText
import os
from copy import deepcopy

def create_ml_document(cv_data: dict, template_path: str, output_path: str = "cv_output.docx"):
    """Creates a motivation letter document using a template and the provided data."""
    context = deepcopy(cv_data)
    # Check if template exists
    if not os.path.exists(template_path):
        raise FileNotFoundError(f"Template file not found: {template_path}")
    # Load the template
    doc = DocxTemplate(template_path)
    # Render the template with our data
    doc.render(context=context, autoescape=True)
    # Save the generated document
    doc.save(output_path)
    return output_path

template_path = "motiv_template.docx"  # Path to your template

for index, row in job_listing.iterrows():
    output_path = f"./CV_outputs/{row.slug}_motivation.docx"
    try:
        created_file = create_ml_document(
            row[['branding','motiv']].to_dict(),
            template_path=template_path,
            output_path=output_path
        )
        print(f"✅ CV has been successfully created at: {created_file}")
    except Exception as e:
        print(f"❌ Error creating document: {str(e)}")

✅ CV has been successfully created at: ./CV_outputs/data_architect_motivation.docx

✅ CV has been successfully created at: ./CV_outputs/online_data_analyst__motivation.docx

✅ CV has been successfully created at: ./CV_outputs/big_data_engineering_motivation.docx

✅ CV has been successfully created at: ./CV_outputs/azure_data_engineer_motivation.docx

✅ CV has been successfully created at: ./CV_outputs/mobile_phone_data_re_motivation.docx

✅ CV has been successfully created at: ./CV_outputs/data_communication_a_motivation.docx

✅ CV has been successfully created at: ./CV_outputs/junior_data_engineer_motivation.docx

✅ CV has been successfully created at: ./CV_outputs/technical_solutionin_motivation.docx

Export to PDF

We can use LibreOffice to convert the generated files to PDF in one batch.

sudo apt install libreoffice-common libreoffice-writer

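import subprocess
from pathlib import Path

# Find all .docx files in the specified folder
input_files = Path("./CV_outputs/")
docx_files = [path.name for path in sorted(input_files.glob("*.docx"))]

# Without a shell, "*.docx" would not be expanded, so pass the file names explicitly
command = ["libreoffice", "--headless", "--convert-to", "pdf", *docx_files]
subprocess.run(command, check=True, cwd=input_files.resolve())

Conclusion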

There we have it! The system can process a large number of job descriptions and generate tailored CVs and motivation letters.

Note that the model used is gpt-3.5-turbo for financial reasons. However, with increasingly performant models such as the recent o1-preview, results promise to be even more impressive.

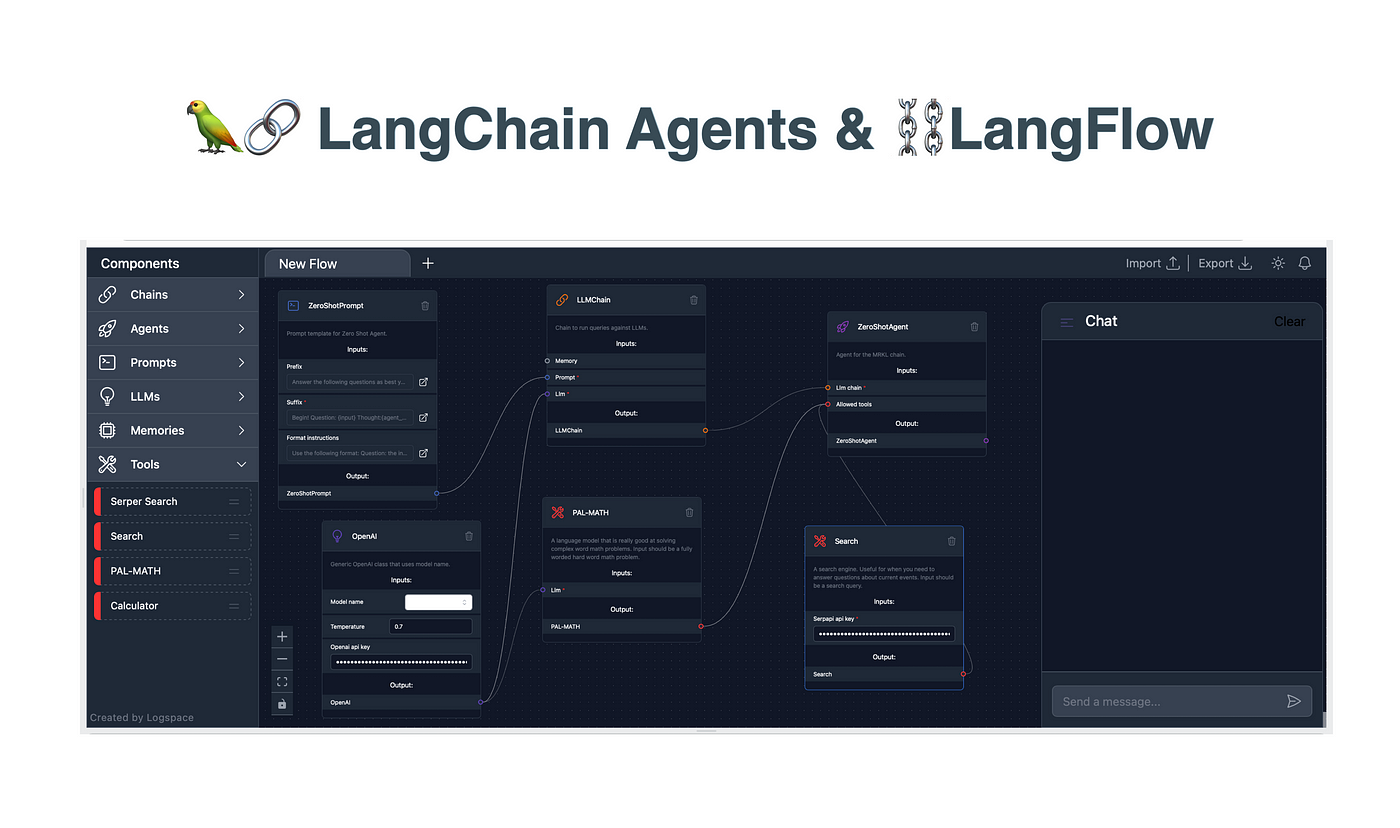

It's also worth mentioning Langflow, a low-code app builder for RAG and multi-agent AI applications that builds on LangChain. This means that even those without programming skills can reproduce this system.

NB: I do not advise using the generated output as-is. Taking time to add a personal touch is always recommended, and I've never actually sent any application using this system.